Commercializing Autonomous Science

Generation 4 Bio AI

Autonomous science is a theme we at Compound have been obsessed with for years (recent post and past futuristic writing). Recently my colleague Mackenzie published an extensive piece on how automated science breakthroughs are advancing across reasoning, experimental planning, and experimental execution, all leading towards a world where we can do experiments largely in silico.

Multiple tailwinds

A theme from the piece is that now more than ever, tech advancements are pushing the boundaries of each piece of lab automation. Reinforcement learning (RL) breakthroughs mean that SOTA models like DeepSeek and TinyZero can be built at a fraction of the cost and time of earlier foundation models, further democratizing the world’s collective knowledge. General robotic arm advancements mean that instead of the shilled $30k arm for experiments, scientists can pay $500. Biology on chips means increased throughput and parallelization of experiments AND changes the form of experiments from liquid-handling based to chip based. We feel the form of experiments is an especially underrated aspect of lab advances, because all lab equipment to date is made for us sloppy humans to interact with. But if one thing is clear, it’s that a first-principles, microfluidics-native system for automating science is likely the unlock for where we are in biology (the Enchippening discusses this at length).

A few examples of bio-chip or bio-microfluidics companies at various stages of development:

Ultima Genomics makes a circular wafer to reduce reagent cost and increase surface area

Pumpkinseed Bio’s tech is built on silicon wafers, with 3 million sensors per square centimeter, allowing for higher-throughput and higher-fidelity proteomics

Parallel Fluidics is a fab for microfluidics chips for biological applications

Avery Digital uses CMOS chips for DNA synthesis and bioengineering

Layering technology unlocks

What we haven’t seen but are compelled by is a PaperQA-type system paired with a chip-native platform for doing biology. Many autonomous science efforts to date (like Coscientist and A-Lab) center on legacy liquid-handling systems. But where better hypothesis generation and reasoning combine with mega-throughput is where we can start to see autonomous science shorten the timescales for discovery and optimization. This is a hard needle to thread, because the bar for teams building here is excellence at ML, chips or microfluidics, and biology. However, teams and technologists able to navigate this complexity will be able to capture an asymmetric opportunity for scientific discovery.

Although this sounds like blithe techno-optimism, the pieces of such a platform are in front of us, with the main step-change being PhD-level intelligence for synthesizing and outputting scientific knowledge.

https://x.com/andrewwhite01/status/1881219134937350250

What are the first use cases?

The closed-loop companies and scientific labs we’ve seen are primarily in the chemistry and materials fields. They include, but are not limited to:

A-Lab, a DeepMind and Berkeley collaboration to synthesize new inorganic materials

A-Lab spinout Radical AI applies physics and ML-based modeling with a self-driving lab to create functional materials. Use-cases include scalable magnets for nuclear fusion, 2D dielectrics for next generation CMOS, UHT ceramics for space heatshields and more

Chemify, which plans and executes the synthesis of new chemical space for therapeutics

ChemOS2.0, which treats the lab as an operating system for closed-loop operations and has been used to discover organic laser molecules

Coscientist, a platform that integrates GPT-designed experiments to execute the optimization of palladium-catalysed reactions

Intrepid Labs uses autonomous systems for improved drug formulations

PostEra, a verticalized medicinal chemistry engine for the development and optimization of small molecules

From ChemOS2.0

While there are many different areas one could explore, we are particularly enthused by innovation on the chemical and materials side, especially around product-focused development (like PostEra). Companies like Intrepid Labs are exciting because they could change the capital efficiency of formulation optimization, an important step in therapeutic development. If we extrapolate this further, we could see many parts of discovery and development optimized in closed-loop settings. This could create a dynamic where contract research organizations (CROs) built from self-driving labs become venture-scale businesses by undercutting human-run lab prices and improving margins through automation and generalizable learnings. We see particular potential around:

Chemical reaction optimization and catalyst discovery

Active pharmaceutical ingredient (API) polymorph and stability testing

Reaction scale-up and process parameter optimization

Optimization of other modalities such as peptides, proteins, delivery vectors, and cells themselves (though less work to date has been done at this level)
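
The closed-loop pattern behind these use cases (plan, execute, learn, repeat) can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: `run_experiment` is a hypothetical stand-in for a robotic run, with a made-up yield curve that peaks at 80 °C, and the search strategy (random proposals inside a shrinking window) is only a placeholder for whatever optimizer a real self-driving lab would use. None of it reflects the actual systems of the companies named above.

```python
import random


def run_experiment(temperature_c: float) -> float:
    """Hypothetical stand-in for a robotic run: a made-up yield curve peaking at 80 C."""
    return 100.0 - 0.05 * (temperature_c - 80.0) ** 2


def closed_loop(rounds: int = 8, batch: int = 6, seed: int = 0):
    """Toy plan -> execute -> learn loop over one process parameter."""
    rng = random.Random(seed)          # seeded so runs are reproducible
    low, high = 20.0, 140.0            # assumed initial search window (C)
    best_t, best_y = None, float("-inf")
    history = []
    for _ in range(rounds):
        # Plan: propose a batch of conditions inside the current window.
        candidates = [rng.uniform(low, high) for _ in range(batch)]
        # Execute: run every proposed condition.
        results = [(t, run_experiment(t)) for t in candidates]
        history.extend(results)
        # Learn: keep the best result so far and halve the window around it.
        t, y = max(results, key=lambda r: r[1])
        if y > best_y:
            best_t, best_y = t, y
        half_width = (high - low) / 4.0
        low, high = best_t - half_width, best_t + half_width
    return best_t, best_y, history


if __name__ == "__main__":
    best_t, best_y, history = closed_loop()
    print(f"best temperature: {best_t:.1f} C, yield: {best_y:.1f}, runs: {len(history)}")
```

The point of the sketch is the shape of the loop, not the optimizer: each round’s results narrow the next round’s proposals, which is where the capital-efficiency argument for self-driving CROs comes from.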

Next order edge cases

While there has been less work on fully autonomous protein discovery and optimization, we are seeing indications that this gap is closing. (On the optimization side, Adaptyv Bio and Triplebar could theoretically add agents to their process for ab initio discovery or optimization.) Beyond that lies the massive and nebulous potential for fully autonomous labs to address problems that can’t be solved under current technology or safety constraints. This framing requires us to think about edge cases of biotechnology where humans by definition couldn’t work at all, or couldn’t work at the scales (time and manpower) necessary to achieve results. Some examples include:

AI-driven response to bioterrorism - A self-learning AI defense system that scans circulating and historic outbreaks, analyzes pathogens and their evolutionary trajectories, and synthesizes drugs or vaccines against them with emergency biofoundries (this could also be a quarantine-zone biolab).

Underground / sealed autonomous labs for extreme biosafety - Deep-underground AI-run bio-labs that experiment with high-risk pathogens, prions (especially given how prevalent and devastating contamination is in prion labs), extinct viruses resurrected from permafrost, or synthetic life forms, ensuring that no human is directly exposed.

AI-contained geoengineering experiments - A closed-system AI biosphere where synthetic ecologies are tested before geoengineering-scale deployment.

AI-directed evolution for novel life forms - AI-driven iterative evolution of synthetic cells inside sealed robotic labs, where AI suggests mutations, runs in silico evolution, and then physically tests them with no human input.

Rogue AI or accidental bio-evolution monitoring - Self-auditing autonomous science platforms that monitor for emergent behaviors in AI-designed biological systems, with AI kill-switches that detect when an experiment is veering into unpredictable evolutionary territory and auto-terminate it.

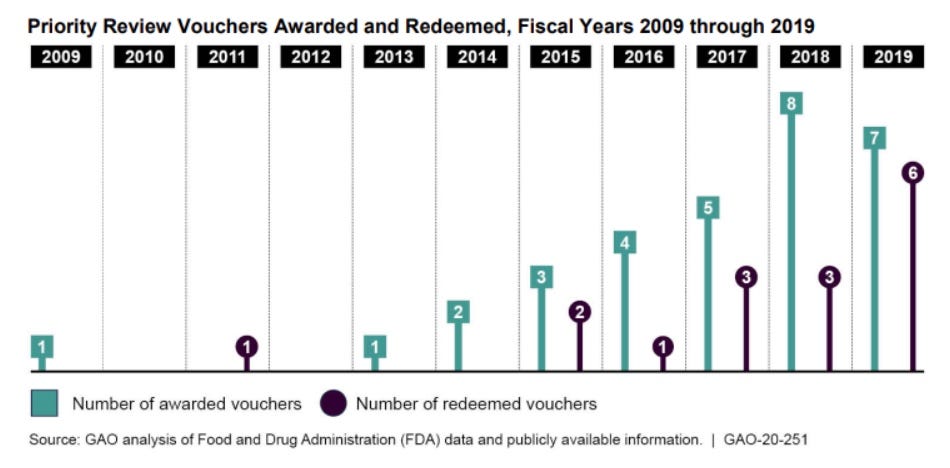

While these scenarios may seem niche or futuristic, that is precisely the point: autonomous science should fundamentally enable capabilities beyond current human limitations. Ideally autonomous science does something we (by definition) can’t. We find the biosecurity use-cases particularly compelling commercially, as we have seen companies that build therapeutics for pandemics and tropical diseases be granted priority review vouchers. These vouchers, which grant expedited review timelines, can be sold for $60M-$350M. Other funding unlocks are possible when use-cases are of national-security importance (e.g. seed-stage HOPO Therapeutics’ $226M award from BARDA and 4J Therapeutics’ $313M contract from BARDA).

Image from GAO.

Edge cases to all cases

The ultimate vision of autonomous science isn’t to be used only in dangerous edge-cases; it’s to redefine science itself. By pushing beyond the constraints of traditional CRO models, autonomous labs can unlock unprecedented efficiencies in everything from catalyst discovery to process scale-up, and even in realms too risky for human intervention. This isn’t merely about cost savings or faster turnaround times; it’s about evolving our entire approach to discovery to Generation 3 biological discovery.

As Michael Bronstein describes in The Road to Biology 2.0 Will Pass Through Black-Box Data, Generation 0 data was collected by human scientists for human scientists and was analyzed using human-designed algorithms based on their best understanding of the problem; Generation 1 companies apply ML to existing data modalities ineffectively (Atomwise, Exscientia); Generation 2 companies produce data in a systematic way by scaling existing experimental technologies (Recursion, Insitro); and Generation 3 is the co-development of ML with novel biological data acquisition technologies (Enveda, VantAI, A-Alpha Bio).

From The Road to Biology 2.0 Will Pass Through Black-Box Data by Michael Bronstein

What we’re proposing seems to be a step beyond what Bronstein described as Generation 3, in that the discovery and optimization are fully agentic and autonomous in nature. We dare to call this instantiation Generation 4 Bio AI. As we integrate advanced ML models, microfluidics, and robotics into our experimental workflows, we edge closer to a future where autonomous science doesn’t just support human-led research but transforms it and pushes it into new modalities and use-cases.

Every breakthrough achieved in these closed-loop systems not only accelerates product development but also generates new data, new models, and new ways of thinking. This creates a virtuous cycle of innovation, eventually yielding data that allows for high-fidelity in silico experimentation.

Thanks to Mike and Mackenzie for your help on this piece!
